Studies Uncover Hidden Brain Pathways of Communication

5 Nov, 2015

New studies from the Perelman School of Medicine at the University of Pennsylvania (Penn Medicine) have uncovered hidden brain pathways of communication and clarified how crucial features of audition are managed by the brain.

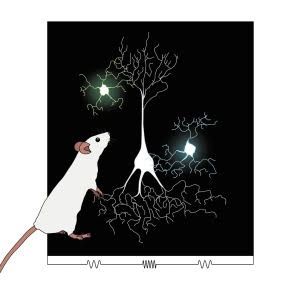

In a Penn Medicine study published online in eLife in October, Maria N. Geffen, PhD, an assistant professor in Penn’s departments of Neuroscience and Otorhinolaryngology and Head and Neck Surgery, discovered how different neurons work together in the brain to reduce responses to frequent sounds and enhance responses to rare sounds.

In their article, Geffen and her co-authors explain that when navigating through complex acoustic environments, we hear both important and unimportant sounds, and an essential task for our brain is to separate out the important sounds from the unimportant ones.

“In everyday conversations, you want to be able to carry on a discussion, yet simultaneously perceive when someone else calls your name,” Geffen said. “Similarly, a mouse running through the forest wants to be able to detect the sound of an owl approaching even though there are many other, more ordinary sounds around him. It’s really important to understand the mechanisms underlying these basic auditory processes, given how much we depend on them in everyday life.”

Researchers have previously found that a perceptual phenomenon known as “stimulus-specific adaptation” might help the brain with this complex task, and that this feature of perception occurs across all our senses. In the context of hearing, it is a reduction in the responses of auditory cortical neurons to sounds that are frequently heard or expected in a given environment. This desensitization to expected sounds reportedly creates a heightened sensitivity to unexpected sounds, which is desirable because unexpected sounds often carry extra significance.
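To make the idea concrete, here is a minimal toy sketch of stimulus-specific adaptation in Python. It is not the model from the eLife study: the function names, the `depletion` and `gap_decay` parameters, and the "resource" mechanism are all assumptions chosen purely for illustration. Each tone draws on its own pool of responsiveness, which is used up when that tone is played and slowly recovers otherwise, so a frequently repeated tone ("A") evokes a weaker response than a rare one ("B"). The contrast index `(deviant - standard) / (deviant + standard)` is a commonly used way to summarize the effect.

```python
def simulate_oddball(sequence, depletion=0.5, gap_decay=0.8):
    """Toy adapting neuron (illustrative assumption, not the study's model).

    Each tone has its own resource in [0, 1]. The response to a tone equals
    the resource currently available for it. Playing a tone removes a
    fraction `depletion` of its resource; on every step, the gap between
    each resource and 1.0 shrinks by the factor `gap_decay` (recovery).
    """
    resources = {}
    responses = []
    for tone in sequence:
        # All previously heard tones recover a little before this tone plays.
        for key in resources:
            resources[key] = 1.0 - (1.0 - resources[key]) * gap_decay
        response = resources.get(tone, 1.0)  # unheard tones are fully fresh
        responses.append(response)
        resources[tone] = response * (1.0 - depletion)
    return responses


def ssa_index(sequence, responses, standard, deviant):
    """Return (mean standard response, mean deviant response, contrast index)."""
    std = [r for t, r in zip(sequence, responses) if t == standard]
    dev = [r for t, r in zip(sequence, responses) if t == deviant]
    std_mean = sum(std) / len(std)
    dev_mean = sum(dev) / len(dev)
    index = (dev_mean - std_mean) / (dev_mean + std_mean)
    return std_mean, dev_mean, index


if __name__ == "__main__":
    # Oddball sequence: nine frequent "A" tones, then one rare "B" tone, repeated.
    tones = (["A"] * 9 + ["B"]) * 10
    std_mean, dev_mean, index = ssa_index(tones, simulate_oddball(tones), "A", "B")
    print(f"standard: {std_mean:.2f}  deviant: {dev_mean:.2f}  index: {index:.2f}")
```

In this sketch the rare tone keeps a near-full response because its resource has time to recover between presentations, while the frequent tone settles at a much lower level. Real cortical circuits are far more complex, as the interneuron findings described in this post make clear.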

Although this phenomenon has been studied before, few tools have been available to examine the function of specific cell types in stimulus-specific adaptation. In the Penn Medicine study, Geffen’s team used recently developed optogenetic techniques, which enable a given type of neuron to be switched on or off at will with bursts of laser light delivered to a lab mouse’s brain through optical fibers.

The team found that two major types of cortical neurons provided two separate mechanisms for this type of adaptation. Both types are inhibitory interneurons, which lessen and otherwise modulate the activity of the main excitatory neurons of the cortex. The researchers found that one type, somatostatin-positive interneurons, exerts much more inhibition on excitatory neurons during repeated, and therefore expected, tones. The other type tested, parvalbumin-positive interneurons, inhibits responses to both expected and unexpected tones, but in a way that also enhances stimulus-specific adaptation.

By recording neural activity during the presentation of test tones, the research team was able to craft a detailed model of how these different interneuron types are wired to their excitatory neuron targets, and how stimulus-specific adaptation emerges from this network. Geffen and her team now plan to use their optogenetic techniques to study how manipulating these interneurons affects mice’s behavioral responses to expected and unexpected sounds.

In a second study at Penn Medicine, outlined in an article in the Journal of Neurophysiology last month, Geffen and members of her laboratory identified an important general principle of brain organization that allows us to recognize a word pronounced by different speakers. Depending on who pronounces a word, the resulting sound can have very different physical features: for example, some people have higher-pitched voices or talk more slowly than others. Our brain is ultimately able to determine that the different sounds represent the same underlying word.

Speech recognition software has been grappling with this problem for many years, say the authors, and one solution is to find a representation of the word that is invariant to these acoustic transformations. Invariant representation refers to the brain’s general ability to perceive an object as that object despite considerable variation in how it is presented to the senses, an ability that can be greatly diminished in people with hearing aids or cochlear implants.

In their study, Geffen and colleagues showed, using their model of mammalian audition, that neurons in a higher auditory processing area of the rat brain, the supra-rhinal auditory field, varied significantly less in their responses to distorted rat vocalizations than did neurons in the primary auditory cortex, which receives relatively raw signals from the inner ear. Geffen and colleagues plan to extend this line of research to determine whether a similar brain mechanism enables hearing and recognition of speech in the presence of noise.